Workload discovery on Google Cloud Platform (GCP) is a tool that quickly visualizes GCP cloud workloads. The solution can be used to customize and share detailed workload visualizations based on live data from a GCP project. In essence, it manages the GCP inventory through the Cloud Asset API.

It works by maintaining an inventory of the resources in the GCP project and visualizing the cloud asset data in Data Studio.

We use the Cloud Asset API for workload discovery. Cloud Asset Inventory supports the following GCP assets:

- Compute Engine instances, instance groups and instance templates

- Cloud Storage buckets

- Networks, subnetworks and firewalls

- Cloud DNS managed zones and Cloud DNS policies

- App Engine applications, services and versions

- Cloud Billing accounts

- IAM policies associated with these resources

- Organizations, folders and projects

- Other Compute Engine resources such as disks, backend buckets, health checks, images and snapshots

Cloud Asset Inventory

Cloud Asset Inventory provides deep and detailed information on resource metadata that can be leveraged to analyze the GCP assets.

The Cloud Asset API lets us manage our inventory of GCP resources. We can use the API to list, search and export GCP assets and their history; it reads resource metadata and does not delete the resources themselves.

To use the Cloud Asset API, we must have a GCP project and enable the API for it. This can be done in the GCP Console by searching for 'Cloud Asset API' in the API Library, then selecting the API and clicking the 'Enable' button.

Since the Cloud Asset API is built on HTTP and JSON, any standard HTTP client can send requests to it and parse the responses.
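Because the API is plain HTTP and JSON, a request can be assembled with nothing more than a standard library. The following is a minimal sketch, not the solution's actual code: it builds (without sending) a `searchAllResources` GET request using Python's `urllib`. The project ID, the helper names and the `ACCESS_TOKEN` placeholder are assumptions for illustration; a real call needs a valid OAuth 2.0 access token.

```python
import json
import urllib.parse
import urllib.request

API_ROOT = "https://cloudasset.googleapis.com/v1"


def build_search_request(scope, asset_types, query, access_token):
    """Build (but do not send) a searchAllResources GET request."""
    params = [("query", query)]
    # Repeated fields are passed as repeated query parameters.
    params += [("assetTypes", asset_type) for asset_type in asset_types]
    url = f"{API_ROOT}/{scope}:searchAllResources?{urllib.parse.urlencode(params)}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


def send(request):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Hypothetical project ID and placeholder token, for illustration only.
request = build_search_request(
    scope="projects/myproject-123",
    asset_types=["compute.googleapis.com/Address"],
    query="NOT state:in_use",
    access_token="ACCESS_TOKEN",
)
print(request.full_url)
```

Calling `send(request)` with a real token would return the matching resources as a JSON document; the sketch stops at building the request so that it runs without credentials.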

Cloud Asset Inventory APIs

- Cloud Asset Inventory client libraries

- REST API for Cloud Asset Inventory

- RPC API for Cloud Asset Inventory

- gcloud asset commands for Cloud Asset Inventory

Cloud Asset Inventory client libraries

- ExportAssetsGcs: Exports assets with time and resource types to a given Cloud Storage location

- ExportAssetsBigQuery: Exports assets with time and resource types to a given BigQuery table

- BatchGetAssetsHistory: Retrieves, in a single batch, the update history of assets that overlap a time window

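- SearchAllResources: Searches all resources within a given scope (project, folder or organization). It can also be used to locate inactive resources that can probably be deleted or cleaned up, e.g., global or regional addresses that have been reserved but are not in use:

      from google.cloud import asset_v1

      client = asset_v1.AssetServiceClient()

      # init request: reserved addresses in the project that are not in use
      request = asset_v1.SearchAllResourcesRequest(
          scope="projects/myproject-123",
          asset_types=[
              "compute.googleapis.com/Address",
          ],
          query="NOT state:in_use",
      )

      # print the full resource name of every match
      for resource in client.search_all_resources(request=request):
          print(resource.name)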

Solution approach

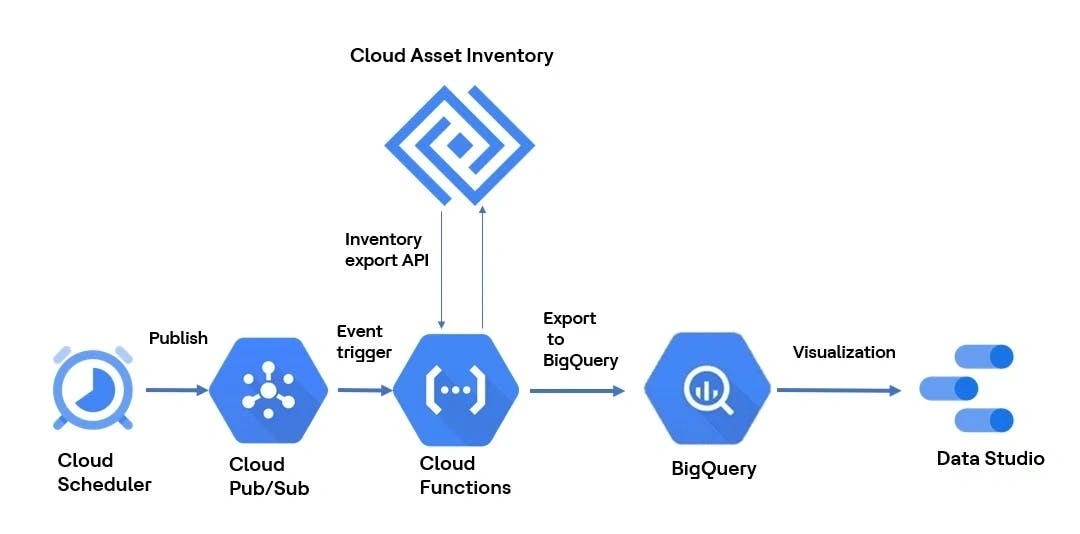

We used the following approach to craft a solution:

- Cloud Scheduler triggers a Cloud Function on a regular schedule by publishing to a Pub/Sub topic

- The Cloud Function initiates a Cloud Asset Inventory API bulk export to BigQuery

- Data Studio visualizes the Cloud Assets data exported to BigQuery

BigQuery dataset

We need to create a BigQuery dataset to hold the exported Cloud Asset data:

    bq mk \
      --data_location us-central1 \
      --dataset CloudAssetAPIDataSet

Pub/Sub topic

We need to create the Pub/Sub topic that will trigger the Cloud Function:

    gcloud pubsub topics create cf-cloudasset-trigger

Cloud functions

We need to deploy the Cloud Function with the gcloud CLI and set the Pub/Sub topic as its trigger:

    gcloud functions deploy java-export-cloudasset-to-bq \
      --runtime java11 \
      --entry-point com.example.Example \
      --trigger-topic cf-cloudasset-trigger \
      --region us-central1 \
      --memory 256MB \
      --timeout 300
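The function deployed above is written in Java and its source is not shown here. To illustrate the kind of logic such a function might carry out, here is a rough Python sketch: it decodes the Pub/Sub message and builds the URL and JSON body for an `exportAssets` call that targets BigQuery. The message fields (`parent`, `dataset`, `table`), the table name `assets` and the helper names are hypothetical; the actual contents of the trigger message are not specified in this article.

```python
import base64
import json


def build_export_body(dataset, table, content_type="RESOURCE"):
    """Return a JSON body for an exportAssets call that writes to BigQuery."""
    return {
        "contentType": content_type,
        "outputConfig": {
            "bigqueryDestination": {
                "dataset": dataset,  # projects/<project>/datasets/<dataset>
                "table": table,
                "force": True,  # overwrite the table if it already exists
            }
        },
    }


def handle_pubsub(event, context=None):
    """Entry point shaped like a Pub/Sub-triggered background function."""
    # Pub/Sub delivers the message payload base64-encoded under "data".
    payload = json.loads(base64.b64decode(event["data"]).decode("utf-8"))
    url = f"https://cloudasset.googleapis.com/v1/{payload['parent']}:exportAssets"
    body = build_export_body(payload["dataset"], payload["table"])
    # A real function would now POST `body` to `url` with an OAuth token.
    return url, body


# Hypothetical message, encoded the way Pub/Sub would deliver it.
message = {
    "parent": "projects/myproject-123",
    "dataset": "projects/myproject-123/datasets/CloudAssetAPIDataSet",
    "table": "assets",
}
event = {"data": base64.b64encode(json.dumps(message).encode("utf-8")).decode("ascii")}
url, body = handle_pubsub(event)
print(url)
print(json.dumps(body, indent=2))
```

Keeping the request construction separate from the handler makes the export parameters easy to test locally, without deploying anything.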

Cloud Scheduler

We use Cloud Scheduler to publish to the topic on a cron schedule; the expression below runs the job every three hours:

    gcloud scheduler jobs create pubsub cloudasset-export-job \
      --location us-central1 \
      --schedule "0 */3 * * *" \
      --topic cf-cloudasset-trigger \
      --message-body-from-file "Asset.json"
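To make the cron expression concrete, the short sketch below expands the minute and hour fields of `0 */3 * * *` into the values they match. The helper `expand_cron_field` is written for this illustration only and handles just `*`, `*/n`, single values, ranges and comma lists; it is not how Cloud Scheduler itself parses schedules.

```python
def expand_cron_field(field, low, high):
    """Expand one cron field ('*', '*/n', 'a', 'a-b', 'a-b/n', comma lists)."""
    values = set()
    for part in field.split(","):
        base, _, step = part.partition("/")
        step = int(step) if step else 1
        if base == "*":
            start, end = low, high
        elif "-" in base:
            start, end = (int(x) for x in base.split("-"))
        else:
            start = end = int(base)
        values.update(range(start, end + 1, step))
    return sorted(values)


minute, hour, *_ = "0 */3 * * *".split()
print(expand_cron_field(minute, 0, 59))  # [0]
print(expand_cron_field(hour, 0, 23))    # [0, 3, 6, 9, 12, 15, 18, 21]
```

The job therefore fires at minute 0 of every third hour, eight times a day.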

Visualizing the data

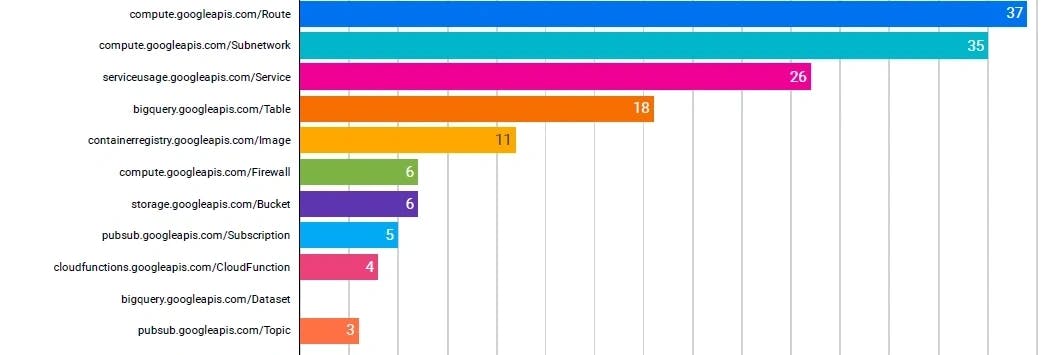

We use Data Studio to visualize the Cloud Assets data exported to BigQuery. Google Data Studio offers several ways to visualize data, including bar charts, pie charts, line charts, graphs, maps, infographics and tables.

Dashboard containing different provisioned resources with the count:

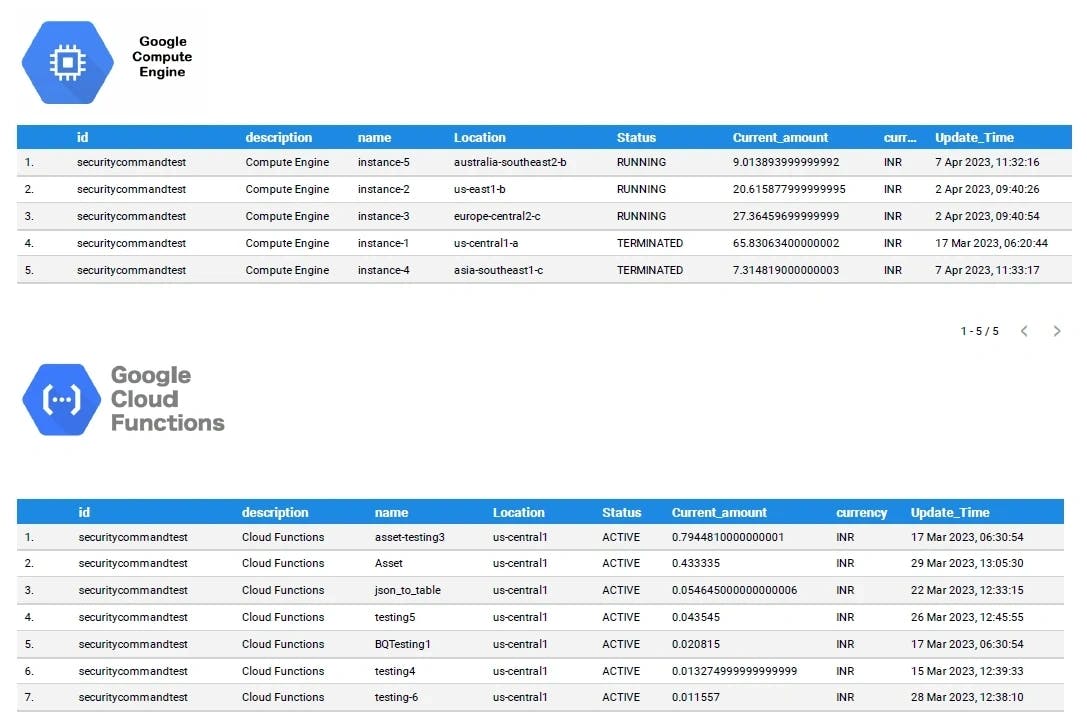
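A resource-count dashboard like this one is typically backed by a simple aggregation over the exported table. As a hedged sketch, the helper below builds such a query; the table name is hypothetical, and the `asset_type` column is assumed to be present, as it is in the standard Cloud Asset Inventory BigQuery export.

```python
def resource_count_sql(table):
    """Return a query that counts exported assets per asset type."""
    return (
        "SELECT asset_type, COUNT(*) AS resource_count\n"
        f"FROM `{table}`\n"
        "GROUP BY asset_type\n"
        "ORDER BY resource_count DESC"
    )


# Hypothetical fully qualified table name: <project>.<dataset>.<table>
print(resource_count_sql("myproject-123.CloudAssetAPIDataSet.assets"))
```

The resulting query could be used as a custom query in a Data Studio BigQuery data source, or run directly in the BigQuery console to check the numbers behind the chart.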

Dashboard containing different provisioned resources with their current status and billing information: