God created humans and they, in turn, created AI algorithms. Neither human decisions nor AI decisions are fully interpretable or explainable. What, then, is interpretable and explainable? According to the dictionary, ‘interpret’ means to understand, construe, infer, deduce, decipher, unravel, and so on. ‘Explain’ means to elucidate, describe, enlighten, clarify, and so on. Both together are required to make a meaningful decision.


These two words have stirred up a storm in the world of AI. Advances in AI models have led to autonomous cars, automated diagnostics, disease detection, smart Q&A systems, intuitive surveillance systems, and so on. The debate is about how AI algorithms arrive at these decisions and whether the internal processes behind them can be interpreted and explained in layman's terms.

While the debate continues, have we succeeded in deciphering how humans make decisions, or how the brain functions while making them? Brain research has been going on for many years, and it could take countless more to understand and interpret how the brain processes inputs and arrives at different decisions. Faced with the same problem, different people make different decisions, with different consequences for mankind. For example, a patient who is advised to have surgery often seeks a second and a third opinion before making an informed decision about the fastest and safest cure. Such decisions are more probabilistic than deterministic. In hindsight, they can be explained with better rationale and data points, but not in foresight. Our foresight is far more ambiguous than our hindsight.

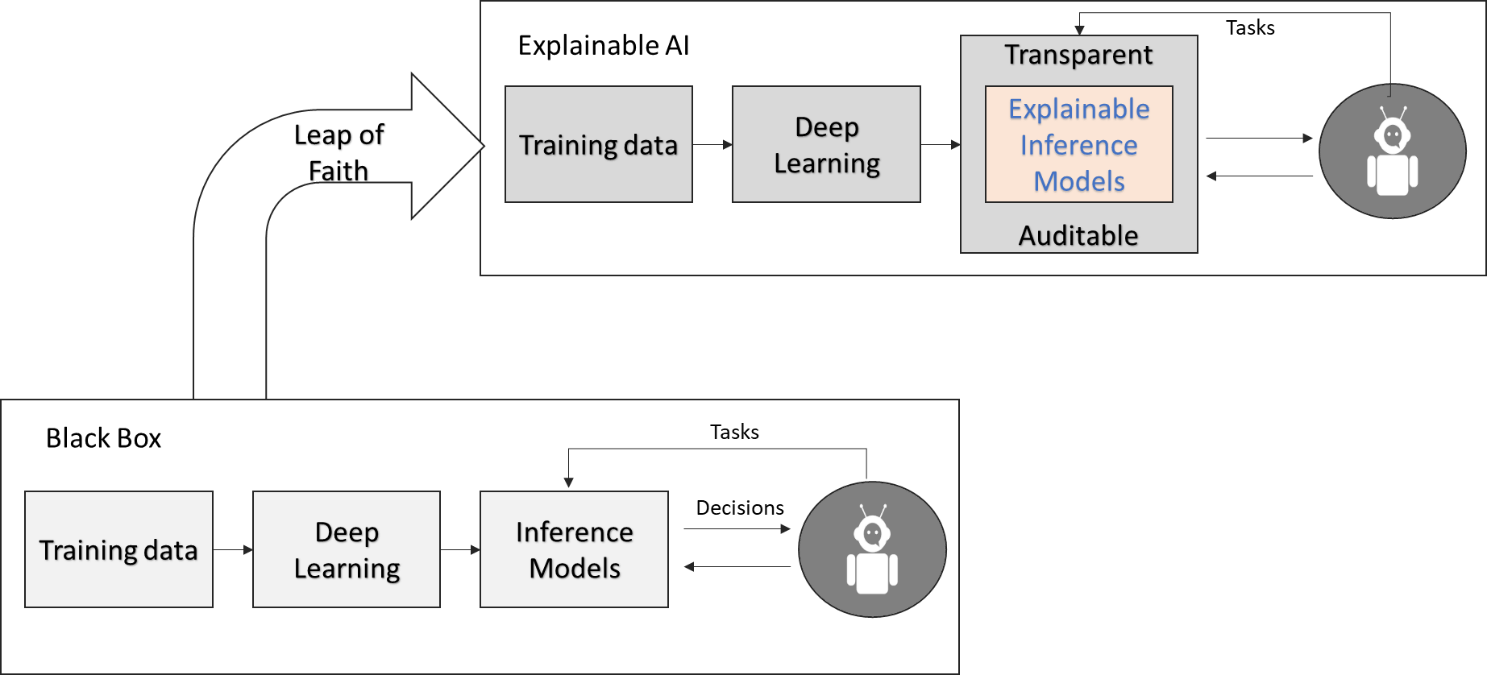

Traditional analytics algorithms such as regression and decision trees are easier to explain than ensemble algorithms such as random forests and XGBoost. In general, the harder we push for accuracy, the lower the interpretability and explainability. As we move toward deep learning (DL), explainability suffers even more: the model becomes a black box, and that is where the challenge arises. DL models are loosely inspired by the brain, which consists of billions of neurons that are networked together and activated to make decisions.
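To make the contrast concrete, here is a minimal sketch of why a regression model is easy to explain. It assumes a hypothetical linear credit-scoring model whose feature names (`income_k`, `debt_ratio`, `years_employed`), weights, and bias are invented for illustration, not taken from any real system. Because the score is a weighted sum, each prediction decomposes exactly into per-feature contributions (weight × value), which is the kind of direct explanation a deep network does not offer.

```python
# Hypothetical linear credit-scoring model: weights and bias are illustrative, not learned.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0


def explain_linear(applicant):
    """Return the model score and each feature's additive contribution (weight * value)."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    return score, contributions


# Hypothetical applicant: income in thousands, debt-to-income ratio, years at current job.
applicant = {"income_k": 50.0, "debt_ratio": 0.5, "years_employed": 3.0}
score, contributions = explain_linear(applicant)

# Rank features by how strongly they pushed the score up or down.
for name, c in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {c:+.2f}")
print(f"{'score':>15}: {score:+.2f}")
```

Here the score of roughly 0.10 is fully accounted for by +2.00 from income, -1.50 from the debt ratio, +0.60 from employment tenure, and the -1.00 bias.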

Black box vs. explainability schools of thought

There are two schools of thought on explainable AI today. The first, led by start-ups and researchers, is fully focused on advancing current algorithms: improving accuracy and precision and reducing training and inference time. Its proponents are comfortable with black-box decision-making and care more about the end results than about how the decisions are made. Geoff Hinton, a pioneer in the AI world, said, “I am an expert on trying to get the technology to work, not an expert on social policy. One place where I do have technical expertise that’s relevant is whether regulators should insist that you can explain how your AI system works. I think that would be a complete disaster.”

The second school of thought, led by regulators, social policy makers, and ethical AI proponents, wants AI models to be fully opened up: everything under the hood analyzed and the complete architecture understood, including how features are learned over thousands of epochs and iterations, how neurons are triggered through activations, and how weights and biases are adjusted to minimize the cost function through forward and backward propagation. Explaining every aspect of this, and how a decision emerges from these computations, would be practically impossible and could put roadblocks in the way of the progress being made in this space. Consider the following scenarios:

- Credit Decisioning: How did the DL model decide to grant or deny credit to an individual? Which key variables influenced the decision, and how objective was it? Was there any bias based on ethnicity, race, religion, or any other demographic attribute?

- Surveillance System: How did the model conclude that a particular individual in a live video stream is involved in suspicious activity? Did any historical biases lead to such a decision?

- Autonomous Vehicles: During an unavoidable accident, how should an autonomous car decide whether to save the life of the passenger in the car or the pedestrian on the road?

- Healthcare: Data in the healthcare industry is highly regulated, and in the US, systems that store it must be HIPAA compliant. AI solutions are data-intensive, so restricted access to data slows innovation. In cancer prediction, patients, regulators, and other healthcare providers will have the same questions: How did the AI system predict that the patient has a grade 3 or grade 4 tumor? Which features influenced the decision?
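For the credit scenario, one simple first check on the bias question is to compare approval rates across demographic groups. The sketch below is an assumption-laden illustration, not a complete fairness audit: the group labels and decisions are hypothetical, and the 0.8 threshold is the commonly used "four-fifths" rule of thumb from US employment-selection guidelines, borrowed here as a heuristic. It only inspects outcomes, so a low ratio signals something worth investigating rather than proving why the model decided as it did.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns the approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest; 1.0 means equal approval rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical model decisions for two demographic groups, "A" and "B".
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = approval_rates(decisions)       # A: 0.8, B: 0.5
ratio = disparate_impact_ratio(rates)   # 0.5 / 0.8 = 0.625
flagged = ratio < 0.8                   # four-fifths heuristic threshold (assumed)
print(rates, round(ratio, 3), "review needed" if flagged else "within heuristic")
```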

Even when humans face the scenarios above, the results vary. Deserving people are still denied credit, innocent people are arrested and later acquitted by the courts, accidents increase as conditions on the roads grow more complex, and opinions vary from one doctor to another. We have many laws, policies, guidelines, and enforcement mechanisms around the world to manage this complex ecosystem. It would be utopian to expect AI systems, which are trained on historical data labeled by imperfect human beings, to be perfect. Improving model quality and explainability is an iterative, evolving process that takes time. The more we pilot these solutions in the real world and learn from real data, the more they mature and, for many use cases, perform better than humans. Nor is AI expected to do everything in this world. If it did, what would we do? It is not great to be idle all day.

Where does the industry stand today on AI interpretability and explainability?

There are two approaches to developing explainable AI systems: ante-hoc and post-hoc. Ante-hoc techniques build explainability into a model from the beginning. Post-hoc techniques leave the black box as it is and explain a trained model's decisions afterward, for example by probing it with specific inputs and analyzing how its outputs respond. The Reverse Time Attention Model (RETAIN) and Bayesian deep learning (BDL) are examples of ante-hoc approaches. Local Interpretable Model-Agnostic Explanations (LIME) and Layer-wise Relevance Propagation (LRP) are examples of post-hoc techniques. The best approach is to combine both to enhance the explainability of current AI systems.
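To illustrate the post-hoc idea, here is a stripped-down, LIME-style local surrogate written from scratch. It is not the `lime` library's implementation (which, among other things, uses interpretable feature representations and feature selection); it only shows the core idea under simple assumptions: perturb one input, query the black box, weight each perturbed sample by its proximity to the original input, and fit a weighted linear model whose coefficients serve as local feature importances. The `black_box` function, the noise scale `sigma`, and the `kernel_width` are hypothetical choices for the example.

```python
import math
import random


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting (a is square)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def lime_style_explanation(black_box, x, n_samples=500, sigma=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return one local weight per feature."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted least-squares normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, sigma) for _ in range(d)]          # random perturbation
        y = black_box([xi + o for xi, o in zip(x, offsets)])          # query the black box
        w = math.exp(-sum(o * o for o in offsets) / kernel_width**2)  # closer samples count more
        row = [1.0] + offsets                                         # intercept + centered features
        for i in range(d + 1):
            xtwy[i] += w * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * row[i] * row[j]
    beta = solve(xtwx, xtwy)
    return beta[1:]  # drop the intercept; the rest are local feature importances


def black_box(v):
    """Hypothetical opaque model; its local slope at (1, 2) is (2, 3)."""
    return v[0] ** 2 + 3.0 * v[1]


importances = lime_style_explanation(black_box, [1.0, 2.0])
print([round(w, 2) for w in importances])  # close to the local slopes 2 and 3
```

The surrogate explains only the neighborhood of one input, which is the trade-off LIME makes: a faithful local story rather than a global account of the model.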

How do we enhance the robustness of current AI systems?

- In traditional software systems, which are mostly rule-based, validation processes use test cases designed to cover normal and exceptional scenarios. Since AI systems are probabilistic, it is advisable to design multiple scenario- and context-based tests to find the chinks in the armor.

- Monitoring, data collection, ongoing training, and validation are required to make models more predictable and interpretable.

- As more AI solutions and products hit the market, organizations need a well-defined process for evaluating AI products and integrating them into the mainstream. More field tests are required to assess how well a product adapts to the context in which it is deployed. For instance, when buying off-the-shelf bots, it is good to know what data was used to train them and what new data is required to address known biases and errors in judgment. This is like administering psychometric tests to assess a candidate's suitability during recruitment: we are, in effect, hiring a bot to augment or replace a human task.

- When gathering real data from the field is difficult, consider synthetic data generated with input from domain experts. Although it may not cover every real-world scenario, it can provide good coverage.
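As one concrete form of the monitoring mentioned above, a deployed model's live inputs can be compared against its training data to detect drift. The sketch below uses the population stability index (PSI), a widely used industry drift metric that the text does not itself name: PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), where eᵢ and aᵢ are the shares of baseline and current values in bin i. The equal-width binning, the epsilon floor, and the sample data are assumptions; a commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, though conventions vary.

```python
import math


def psi(baseline, current, n_bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]  # eps floor avoids log(0)

    e, a = proportions(baseline), proportions(current)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


baseline = [i / 100 for i in range(100)]       # hypothetical training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]  # hypothetical live values, concentrated higher
print(round(psi(baseline, baseline), 4))       # identical distributions
print(round(psi(baseline, shifted), 4))        # large value signals drift
```

A high PSI does not say the model is wrong, only that it is now seeing data unlike what it was trained on, which is a cue for the ongoing training and validation the list recommends.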

Conclusion

The future of AI is quite promising. It is still the new kid on the block, one we need to feed, nurture, groom, care for, motivate, and evolve. The debate on explainability and interpretability will grow noisier in the coming days. It is preferable to take a middle path between the two schools of thought: black box versus full explainability. Imperfection and uncertainty are part of the beauty of the universe, and mankind can work around them. Like humans, AI will soon mature to perceive, learn, abstract, and reason. AI is here to stay, and it will keep evolving.